Have you gotten this message?

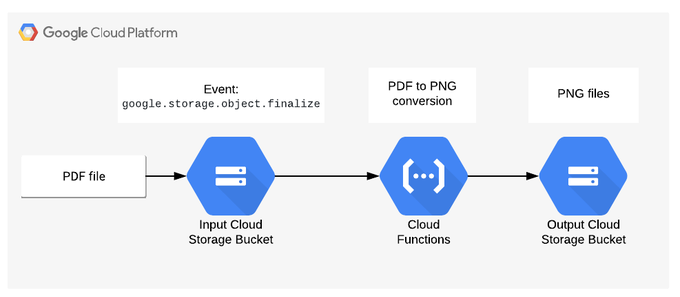

Google Cloud is designed to run each endpoint in a separate ‘container’. This makes scaling easy, but managing connections to external resources while still achieving top performance becomes a new challenge.

In each container, all of the dependent resources are initialized and connected. Thanks to the way Google implemented the containers, these connections can be held open. The drawback is that with 500 endpoints it is easy to rack up held connections, and even more so across regions or when each container opens multiple connections to the host. Let me ask: when was holding thousands of open connections to MySQL a good idea? However, if we close and reopen connections on every call, we lose the benefit of reusing connections and performance plummets.

Each container can open and maintain a db connection
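To put rough numbers on that, here is a back-of-the-envelope sketch. The function and its parameter names are my own illustration, and the real instance count depends on how many containers Google decides to run for each function, so treat the inputs as estimates.

```javascript
// Rough upper bound on open database connections when every function
// keeps its own connection(s) alive. All inputs are estimates you supply.
function worstCaseConnections({ endpoints, instancesPerEndpoint, connectionsPerInstance, regions }) {
  return endpoints * instancesPerEndpoint * connectionsPerInstance * regions;
}

// 500 endpoints, one warm instance each, a pool of 2, deployed to 1 region
console.log(worstCaseConnections({
  endpoints: 500,
  instancesPerEndpoint: 1,
  connectionsPerInstance: 2,
  regions: 1,
})); // 1000
```

With the pattern in this post, only the crud function holds connections, so the endpoints factor effectively drops to 1.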

What do we do?

There are a few ways to combat this situation: limiting the number of endpoints that initialize the database and create connections, opening and closing connections before and after each call, and others.

But I’d like to focus on one particular solution: creating an httpsCallable function specifically for connecting to the database, and calling it from one or more other cloud functions.

Wait … what was that? Yes, call a cloud function from another cloud function.


It may not be readily apparent, but this solution decreases coupling, increases isolation, eases management of access to database functions, increases control over scaling, and simplifies connection management.

This solution worked well for me since I was using a cost-efficient database resource with a limited number of simultaneous connections. With a single container managing all of the connections, I could control exactly how many connections I was maintaining.
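Even within that one container, concurrent requests can each reach for a connection at the same time. Below is a minimal sketch of capping in-flight database calls with a small queue; createLimiter is a hypothetical helper, not a Firebase API. Limiting how many containers exist in the first place is a separate knob (for example the maxInstances runtime option in firebase-functions), so this complements that setting rather than replacing it.

```javascript
// Hypothetical helper: runs at most `max` async tasks at once and
// queues the rest until a slot frees up.
function createLimiter(max) {
  let active = 0;
  const queue = []; // waiting tasks: { task, resolve, reject }

  const startNext = () => {
    if (active >= max || queue.length === 0) return;
    const { task, resolve, reject } = queue.shift();
    active++;
    // Run the task; when it settles, free the slot and start the next one.
    Promise.resolve()
      .then(task)
      .then(resolve, reject)
      .finally(() => {
        active--;
        startNext();
      });
  };

  return {
    run(task) {
      return new Promise((resolve, reject) => {
        queue.push({ task, resolve, reject });
        startNext();
      });
    },
    get active() { return active; },
    get pending() { return queue.length; },
  };
}

// Example inside the crud function (sketch):
// const dbLimiter = createLimiter(5); // at most 5 queries in flight
// const row = await dbLimiter.run(() => _dbmodule.read(id));
```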

If you have worked with the Netflix Java API, you know there’s a pretty hefty setup cost for calling functions from functions. Google’s API, by contrast, is really straightforward, and once you learn the magic it’s as easy as “Alakazam.” Unfortunately, this interface is not well documented, and the information I found on the web was outdated and didn’t work.

The idea itself is quite simple: create an httpsCallable endpoint that is only called from other functions.

The ingredients to make this work are: an httpsCallable endpoint, the context from the caller, the auth token from the caller, and a carefully crafted HTTPS POST request.

Let’s get started.

httpsCallable functions look very similar to an RPC: literally functionName(arguments), as simple as it can be. What’s really nice is that authentication tokens are verified automatically, CORS is handled for you, and the handler simply does return <data> or throw <message>. No messy status codes and no conversion to strings; it’s all taken care of automatically. Less code is better, right?


To start, I’m assuming that you know how to set up Google Firebase; if not, the Firebase documentation is a good refresher. Here we use only a trivial example to show how it can be done.

In functions/index.js, create an export like the one below. The name ‘crud’ is what you’ll be referencing later, as we’ll see.

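```javascript
// added to index.js
// v1-style API (data, context); recent firebase-functions releases may
// require importing this from 'firebase-functions/v1' instead.
const functions = require('firebase-functions');

let _dbmodule;

exports.crud = functions.https.onCall(async (data, context) => {
  // Load the database module on first use only; later calls in this
  // container reuse the cached module (and its open connections).
  if (!_dbmodule) { _dbmodule = require('./db'); } // some database module
  const [op, ...rest] = data; // e.g. ['read', id]
  try {
    return await _dbmodule[op](...rest);
  } catch (e) {
    // Errors that are not functions.https.HttpsError reach the caller
    // as a generic INTERNAL error.
    throw e;
  }
});
```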

This function is what will be called from the other functions. To avoid loading or connecting to the database in every function, we require the dependency inside the function. The first time through, _dbmodule will be undefined; after that it’s initialized and we won’t call require again.
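The same trick can be factored into a tiny helper. This is a sketch, and lazy is a hypothetical name rather than a Firebase API. Node’s require cache already makes repeated require calls cheap, so the real win is deferring the first load (and any connection it opens at import time) until a function actually needs it.

```javascript
// Generic "load on first use" helper: the loader runs once per container,
// and every later call returns the cached value. A flag is used instead of
// a truthiness check so falsy values are cached too. If the loader throws,
// nothing is cached and the next call tries again.
function lazy(loader) {
  let loaded = false;
  let value;
  return () => {
    if (!loaded) {
      value = loader();
      loaded = true;
    }
    return value;
  };
}

// Usage in index.js (sketch):
// const getDb = lazy(() => require('./db'));
// ...inside the crud handler: const db = getDb();
```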

Most often we would use a CRUD interface (though other approaches exist, such as CQRS). Assuming CRUD, ‘op’ would be one of ‘create’, ‘read’, ‘update’, or ‘delete’. The arguments for create would be the new data; for read, an id; for update, an id and the new data; and for delete, an id (remember to use a soft delete if your app references this data from other tables).
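Because ‘op’ arrives from the caller and is used to look up a property on the module, it is worth checking it against the four CRUD names first. A minimal sketch follows; dispatch and ALLOWED_OPS are hypothetical names. Inside a real onCall handler you would likely throw new functions.https.HttpsError('invalid-argument', ...) instead of a plain Error, so the caller gets a meaningful error code.

```javascript
// Only these operations may be invoked through the generic crud endpoint.
// Anything else is rejected, so a caller cannot reach arbitrary properties
// such as "constructor".
const ALLOWED_OPS = new Set(['create', 'read', 'update', 'delete']);

// data is the array the caller sent: [op, ...args]
function dispatch(dbModule, data) {
  if (!Array.isArray(data) || data.length === 0) {
    throw new Error('expected [op, ...args]');
  }
  const [op, ...args] = data;
  if (!ALLOWED_OPS.has(op)) {
    throw new Error(`unsupported op: ${op}`);
  }
  return dbModule[op](...args);
}
```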

That database interface file (db.js) might look like the following:

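```javascript
// db.js
// CommonJS export, because index.js loads this file with require().
// `db` stands in for your database client; its create/query/update calls
// and the Mongo-style $set below are placeholders for its real API.
const db = require('./your-db-client'); // hypothetical module

module.exports = {
  create: async (data) => await db.create(data),
  read: async (id) => await db.query({ id }),
  update: async (id, data) => await db.update({ id, $set: data }),
  // soft delete: flag the row instead of removing it
  delete: async (id) => await db.update({ id, $set: { deleted: 1 } }),
};
```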
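For local experiments it can help to exercise this interface without a database server. Below is a hypothetical in-memory stand-in exposing the create, query, and update methods the db.js sketch assumes, with the same four CRUD wrappers on top. It is not a real driver; note that it hides soft-deleted rows from reads, which a real query would need an explicit filter for.

```javascript
// Hypothetical in-memory stand-in for the `db` client used in db.js.
function createMemDb() {
  const rows = new Map();
  let nextId = 1;
  return {
    async create(data) {
      const id = nextId++;
      rows.set(id, { ...data, id });
      return id;
    },
    async query({ id }) {
      const row = rows.get(id);
      // Soft-deleted rows are treated like missing ones.
      return row && !row.deleted ? { ...row } : undefined;
    },
    async update({ id, $set }) {
      const row = rows.get(id);
      if (!row) return false;
      rows.set(id, { ...row, ...$set });
      return true;
    },
  };
}

// The CRUD wrappers from db.js, wired to the in-memory client.
const db = createMemDb();
const crudOps = {
  create: async (data) => await db.create(data),
  read: async (id) => await db.query({ id }),
  update: async (id, data) => await db.update({ id, $set: data }),
  delete: async (id) => await db.update({ id, $set: { deleted: 1 } }),
};
```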

With those two pieces in the bag, let’s look at the tricky part: calling the httpsCallable function from your other function.

The module that does the dirty work of creating and calling the httpsCallable has to follow the protocol specification exactly; if it doesn’t, your day will not be fun. In this example, a closure is used to simplify the function signature and to adhere to the ‘no more than 3 parameters’ rule, but other implementations are possible.

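```javascript
// httpsCallable.js
const axios = require('axios');

module.exports = function (name, context) {
  const { protocol, headers } = context.rawRequest;
  // Reuse the host the caller was reached on (or the forwarded host behind a proxy)
  const host = headers['x-forwarded-host'] || headers.host;
  // there will be two different paths for
  // production and development (the emulator adds /<project>/<region>)
  const url = `${protocol}://${host}/${name}`;
  const method = 'post';
  // The caller's "Bearer <ID token>" header, forwarded as-is
  const auth = headers.authorization;

  // async so that `await` below is valid
  return async (...rest) => {
    // Callable protocol: the body must be JSON of the form {"data": ...}
    const data = JSON.stringify({ data: rest });
    const config = {
      method, url, data,
      headers: {
        'Content-Type': 'application/json',
        'Authorization': auth,
        'Connection': 'keep-alive',
        'Pragma': 'no-cache',
        'Cache-Control': 'no-cache',
      },
    };
    try {
      // Successful responses look like {"result": ...}
      const { data: { result } } = await axios(config);
      return result;
    } catch (e) {
      throw e;
    }
  };
};
```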

Let’s break down this code.

protocol – you know this one already: http or https.

host – the host used by the caller. Since we expect to be calling into the same region, we can grab it and reuse it. It can also point to a load balancer if you are using one.

url – the generated URL. In production it should have the format ‘<protocol>://<region>-<project_id>.cloudfunctions.net/<name>’.

method – the Google documentation requires these calls to be a POST.

auth – the ‘Bearer’ authorization header that came from the caller. The token it carries is the user’s Firebase ID token, a JWT that the httpsCallable engine validates.
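Because the host-based URL only matches the production layout, here is a sketch that builds either form explicitly. The callableUrl function and its emulatorHost parameter are hypothetical; the emulator layout (http://<host>:<port>/<project_id>/<region>/<name>) is the one the Firebase emulator serves functions on, and ‘my-app’ in the example is a placeholder project id.

```javascript
// Builds the URL of an HTTPS callable function.
// Production: https://<region>-<project_id>.cloudfunctions.net/<name>
// Emulator:   http://<emulatorHost>/<project_id>/<region>/<name>
function callableUrl({ projectId, region, name, emulatorHost }) {
  if (emulatorHost) {
    return `http://${emulatorHost}/${projectId}/${region}/${name}`;
  }
  return `https://${region}-${projectId}.cloudfunctions.net/${name}`;
}

// Example with a placeholder project id:
// callableUrl({ projectId: 'my-app', region: 'us-central1', name: 'crud' })
//   -> 'https://us-central1-my-app.cloudfunctions.net/crud'
```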

Moving right along: our closure can be called directly with the arguments. The three key pieces you need to be aware of are the following:

1. data – the arguments wrapped in an object under a data key and serialized with JSON.stringify. The httpsCallable endpoint requires this shape.

2. ‘Content-Type’ header – must be application/json so the httpsCallable engine can parse the input and hand it to your function directly.

3. ‘Authorization’ header – the user’s ID token (a JWT). Forwarding it gives the call the same access as the original user, and the callee sees that user in context.auth.
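Those three pieces, plus the shape of the reply, can be captured in two small pure functions. This is a sketch with hypothetical names (buildCallableRequest, parseCallableResponse); it assumes the callable wire format of a {"data": ...} request body and either {"result": ...} or {"error": {"status", "message"}} in the response body.

```javascript
// Builds the POST request an HTTPS callable expects.
// args becomes the callee's `data`; authHeader is the caller's
// "Bearer <ID token>" header, forwarded unchanged.
function buildCallableRequest(url, authHeader, args) {
  const headers = { 'Content-Type': 'application/json' };
  if (authHeader) headers.Authorization = authHeader;
  return {
    method: 'POST',
    url,
    headers,
    body: JSON.stringify({ data: args }),
  };
}

// Unwraps a parsed callable response body: {"result": ...} on success,
// {"error": {"status": ..., "message": ...}} on failure.
function parseCallableResponse(body) {
  if (body && body.error) {
    const err = new Error(body.error.message || 'callable error');
    err.status = body.error.status;
    throw err;
  }
  return body.result;
}
```

Keep in mind that error replies also come back with a non-2xx HTTP status, and axios rejects on those by default, so in the module above the callee’s error details end up in e.response.data.error rather than in result.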

Now let’s look at how to use that module in a different httpsCallable cloud function. The function can be as simple or as complex as needed; in our case we are just forwarding a ‘named’ entry point to the single generic database endpoint.

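```javascript
// added to index.js
let _httpsCallable;

exports.getUsers = functions.https.onCall(async (data, context) => {
  // Load the helper module only on first use (keeps cold starts light)
  if (!_httpsCallable) _httpsCallable = require('./httpsCallable');
  const crud = _httpsCallable('crud', context);
  try {
    // assumes the client sends an array of arguments, e.g. [id]
    return await crud('read', ...data);
  } catch (e) {
    // errors are swallowed here; the client just gets an empty result
    return undefined;
  }
});
```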

Once again, to avoid adding extra load to the cold start, we check for and cache our httpsCallable module.

Now it’s getting interesting. We create a closure which, when called with our arguments, fires off a request to our crud httpsCallable function.

Simple.


Let’s talk negatives. Calling another cloud function adds to the cold-start delay, which, if the module is implemented poorly, can be as much as 5 seconds! The practices suggested above reduce that time. Another concern is the extra level of indirection, which can be difficult for a new team member to wrap their head around. And since it’s a different interface, extra care is needed when making changes to the cloud function.

You should use this pattern when you need to manage remote connections in a single container, limit the number of simultaneous connections to an external resource independently of the number of endpoints, or limit direct access to the database API for security reasons.

In my implementation I added a switch that lets me either enable the remote endpoint or handle the calls directly. This helped me understand the differences between the two implementations and compare their performance.
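The switch itself isn’t shown here, so below is one hypothetical way to build it: a factory that returns the same (op, ...args) signature whether calls go to the remote crud callable or straight to the local db module. The names makeDbCaller, loadLocal, and the USE_REMOTE_DB environment variable are all my own assumptions. The local module is passed as a loader so that remote mode never requires db.js.

```javascript
// Hypothetical switch: route CRUD calls either to the remote `crud`
// callable or directly to the local db module, behind one signature.
function makeDbCaller({ useRemote, remote, loadLocal }) {
  if (useRemote) {
    // remote is the closure returned by _httpsCallable('crud', context)
    return (op, ...args) => remote(op, ...args);
  }
  // loadLocal is only invoked in direct mode, so remote mode never loads db.js
  return (op, ...args) => loadLocal()[op](...args);
}

// Usage inside getUsers (sketch):
// const useRemote = process.env.USE_REMOTE_DB === 'true'; // hypothetical env var
// const callDb = makeDbCaller({
//   useRemote,
//   remote: _httpsCallable('crud', context),
//   loadLocal: () => require('./db'),
// });
// return await callDb('read', ...data);
```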

I hope you found this helpful and can utilize this technique in your projects as well.

See you on the flip side.